Using Cloudera Streams Messaging Manager for Apache Kafka Monitoring, Management, Analytics and CRUD

Streams Messaging Manager (SMM) is a powerful tool for working with Apache Kafka. It provides monitoring, management, and analytics, and it also lets you create Kafka topics. You can monitor servers, brokers, consumers, producers, topics, and messages, and you can easily build alerts based on the events that occur with those entities.

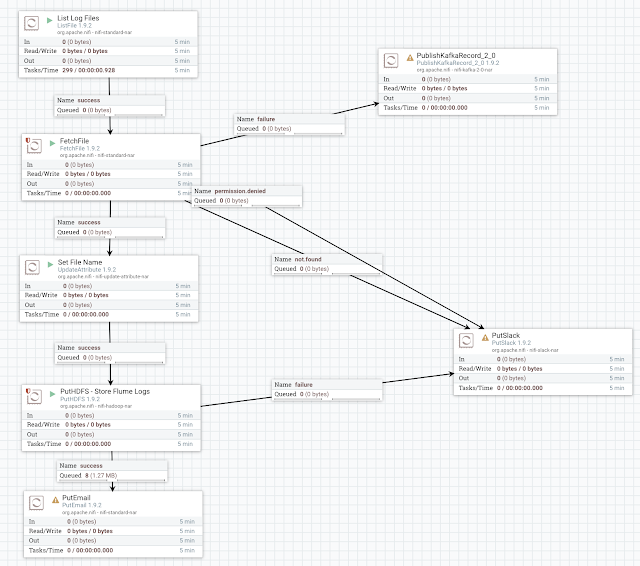

From Cloudera Manager, we can now install and manage Kafka, SMM, NiFi and Hadoop services.

Let's create a Kafka topic with no command line needed!

For a simple topic, we select the Low setting, which gives a replication factor of one and a replica count of one. We also set the cleanup policy to delete.
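SMM handles all of this in its form, but the Kafka rules behind those choices can be sketched in a few lines of Python. This is a minimal sketch, not SMM's implementation: `TopicSpec` and `build_topic_spec` are hypothetical names, the partition count of one is an assumption, and only two basic Kafka constraints are checked (the replication factor cannot exceed the number of available brokers, and `cleanup.policy` accepts `delete`, `compact`, or both).

```python
from dataclasses import dataclass, field
from typing import Dict

# Kafka accepts "delete", "compact", or both ("compact,delete") for cleanup.policy.
VALID_CLEANUP_POLICIES = {"delete", "compact"}


@dataclass
class TopicSpec:
    """Hypothetical container for the settings chosen in the SMM topic form."""
    name: str
    partitions: int
    replication_factor: int
    configs: Dict[str, str] = field(default_factory=dict)


def build_topic_spec(name: str, partitions: int, replication_factor: int,
                     cleanup_policy: str, broker_count: int) -> TopicSpec:
    """Check the settings against basic Kafka constraints and return a spec."""
    if partitions < 1:
        raise ValueError("a topic needs at least one partition")
    if not 1 <= replication_factor <= broker_count:
        # Kafka rejects a replication factor larger than the number of brokers.
        raise ValueError(f"replication factor must be between 1 and {broker_count}")
    policies = {p.strip() for p in cleanup_policy.split(",")}
    if not policies <= VALID_CLEANUP_POLICIES:
        raise ValueError(f"unsupported cleanup.policy: {cleanup_policy!r}")
    return TopicSpec(name, partitions, replication_factor,
                     {"cleanup.policy": cleanup_policy})


if __name__ == "__main__":
    # Assumed values: one partition, replication factor 1, a three-broker cluster.
    spec = build_topic_spec("example-topic", 1, 1, "delete", broker_count=3)
    print(spec)
```

With a replication factor of one, each partition lives on a single broker, so losing that broker makes the partition unavailable; that trade-off is why this setting suits a simple test topic.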

Let's create an alert.

For this one, if the nifi-reader consumer group has a lag of 100 or more, send me an email.
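The alert boils down to a threshold on consumer group lag. A minimal sketch, assuming lag is computed the usual Kafka way (log end offset minus committed offset, summed across the group's partitions); SMM's own computation may differ, and the function names and offsets below are hypothetical.

```python
from typing import Dict


def consumer_group_lag(log_end_offsets: Dict[int, int],
                       committed_offsets: Dict[int, int]) -> int:
    """Total lag for one consumer group across the partitions it consumes.

    Per-partition lag = log end offset - committed offset. A partition with no
    committed offset is counted from offset 0 here, which is a simplifying
    assumption (a real consumer would follow auto.offset.reset).
    """
    total = 0
    for partition, end_offset in log_end_offsets.items():
        committed = committed_offsets.get(partition, 0)
        total += max(end_offset - committed, 0)
    return total


def should_alert(lag: int, threshold: int = 100) -> bool:
    """Mirrors the policy condition CONSUMER_GROUP_LAG >= 100."""
    return lag >= threshold


if __name__ == "__main__":
    # Hypothetical offsets for a two-partition topic read by nifi-reader.
    end_offsets = {0: 500, 1: 420}
    committed = {0: 430, 1: 380}
    lag = consumer_group_lag(end_offsets, committed)
    print(lag, should_alert(lag))  # 110 True
```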

Let's browse the Kafka infrastructure in our Cloudera Kafka cluster on AWS; it is very easy to navigate.

You can dive into a topic and see individual messages, including their offsets, keys, values, timestamps, and more.

We can also zoom into a single message in a topic.

Let's analyze a topic's configuration.

Example Alert Sent

Notification id: 56d35dcc-8fc0-4c59-b70a-

Root resource name: nifi-reader,

Root resource type: CONSUMER,

Created timestamp: Thu Aug 22 18:42:41 UTC 2019 : 1566499361366,

Last updated timestamp: Thu Aug 22 18:42:41 UTC 2019 : 1566499361366,

State: RAISED,

Message:

Alert policy : "ALERT IF ( CONSUMER (name="nifi-reader") CONSUMER_GROUP_LAG >= 100 )" has been evaluated to true Condition : "CONSUMER_GROUP_LAG>=100" has been evaluated to true for following CONSUMERS - CONSUMER = "nifi-reader" had following attribute values * CONSUMER_GROUP_LAG = 139
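If you want to post-process notifications like this one, a few lines of Python can recover the fields. This sketch assumes the `Key: value,` layout seen in the sample above (the real notification format may differ), and `parse_notification`, `millis_to_utc`, and `reported_lag` are hypothetical helpers. The number after the final colon of each timestamp is treated as epoch milliseconds, which agrees with the human-readable UTC time printed beside it.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Dict

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def parse_notification(text: str) -> Dict[str, str]:
    """Split 'Key: value,' lines into a dict, stripping the trailing comma."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        # Split on the first colon only; timestamps contain more colons.
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip().rstrip(",")
    return fields


def millis_to_utc(millis: int) -> datetime:
    """Convert epoch milliseconds to an aware UTC datetime without float rounding."""
    return EPOCH + timedelta(milliseconds=millis)


def reported_lag(message: str) -> int:
    """Extract the 'CONSUMER_GROUP_LAG = N' value from the alert message."""
    match = re.search(r"CONSUMER_GROUP_LAG = (\d+)", message)
    if match is None:
        raise ValueError("no CONSUMER_GROUP_LAG value in message")
    return int(match.group(1))


if __name__ == "__main__":
    sample = ("Root resource name: nifi-reader,\n"
              "Created timestamp: Thu Aug 22 18:42:41 UTC 2019 : 1566499361366,\n"
              "State: RAISED,")
    fields = parse_notification(sample)
    millis = int(fields["Created timestamp"].rsplit(":", 1)[1])
    print(millis_to_utc(millis).strftime("%a %b %d %H:%M:%S UTC %Y"))
    # Thu Aug 22 18:42:41 UTC 2019
```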

Software

- CSP 2.1

- CDH 6.3.0

- Cloudera Schema Registry 0.80

- CFM 1.0.1.0

- Apache NiFi Registry 0.3.0

- Apache NiFi 1.9.0.1.0

- JDK 1.8

Resources

- https://www.cloudera.com/products/cdf/csp.html

- https://docs.hortonworks.com/HDPDocuments/CSP/CSP-1.0.0/index.html

- https://docs.hortonworks.com/HDPDocuments/SMM/SMM-1.2.1/monitoring-kafka-clusters/content/smm-monitoring-clusters.html