AI - Apache Flink - Apache Kafka - Apache Iceberg - Apache Polaris - Apache NiFi - Streaming - IoT - Data Engineering - SQL - RAG - @PaaSDev

Posts

Updating Machine Learning Models At The Edge With Apache NiFi and MiNiFi

Using Cloudera Streams Messaging Manager for Apache Kafka Monitoring, Management, Analytics and CRUD

EFM Series: Using MiNiFi Agents on Raspberry Pi 4 with Intel Movidius Neural Compute Stick 2, Apache NiFi and AI

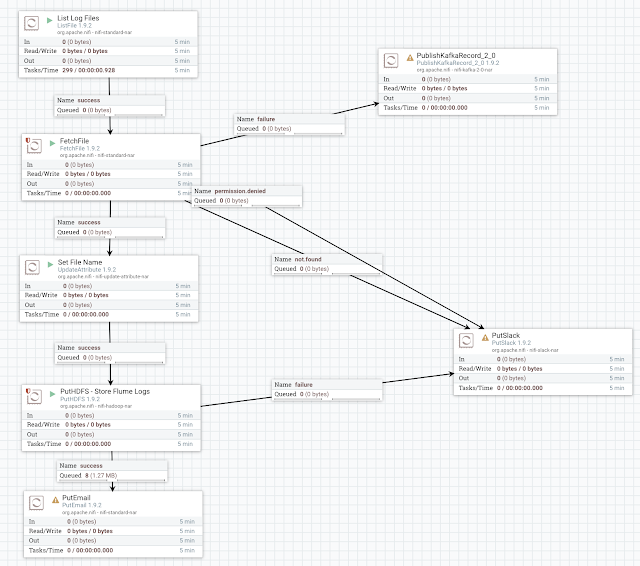

Migrating Apache Flume Flows to Apache NiFi: Log to HDFS
